combination of

"Computer Architecture and Amdahl's Law"

by Gene M. Amdahl

and

"An Interview with Gene M. Amdahl"

of Gene M. Amdahl by William S. Anderson, focusing on the startup and operation of Amdahl and Trilogy corporations

individual articles from "IEEE Solid-State Circuits Society News"

Summer 2007

CONTENTS

My educational background has never included any training in the field of computing, so all of my design activities have been based on my experience and the necessity of solving current problems. Consequently, my computer architecture contributions will largely be autobiographical.

Farm, one room grade school

College, Navy, Marriage, College

Returning to

SDSC in 1947, I selected physics as

my major.

When I

was due to graduate in June 1948, I

applied to several graduate schools

to study Theoretical Physics. I was

accepted at the University of Wisconsin at Madison.

University of Wisconsin

and summer job using EDVAC

Back at Wisconsin, design a better computer

with drum memory

Each arithmetic operation and

others took one drum revolution to

be certain the instruction calling

for it was acquired, then a second

revolution to be certain the

operands were acquired, then a

revolution to perform the operation, and finally a revolution to be

certain the result had been stored.

Since the operations were nonconflicting, there were four

instructions in the pipeline at all

times, one picking up its instruction, one picking up its operands,

one performing its operation and

one storing its result. Consequently the computer performed one

floating point operation per drum

revolution. I believe there were

several world firsts in that design:

the first electronic computer to

have floating point arithmetic (and

certainly the first to have only

floating point arithmetic), the first

electronic computer to have

pipelining, and the first electronic

computer to have input and output

operated concurrently and independently of computing!
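The four-stage overlap described above can be illustrated with a small Python sketch. It is only a toy model under stated assumptions: every instruction is independent (the "nonconflicting" case), each stage takes exactly one drum revolution, and the names `STAGES`, `revolutions_needed`, and `occupancy` are invented here for illustration and do not come from the original design.

```python
# Toy model of a four-stage drum pipeline (illustrative names, not from the source).
# Assumption: each stage takes exactly one drum revolution and instructions never conflict.
STAGES = ("fetch instruction", "fetch operands", "execute", "store result")


def revolutions_needed(n_instructions: int, pipelined: bool = True) -> int:
    """Return the drum revolutions needed to finish n independent instructions."""
    if n_instructions <= 0:
        return 0
    if pipelined:
        # The first instruction needs one revolution per stage; after that,
        # one more instruction completes on every further revolution.
        return len(STAGES) + (n_instructions - 1)
    # Without overlap, each instruction occupies the machine for all four stages.
    return len(STAGES) * n_instructions


def occupancy(revolution: int, n_instructions: int) -> dict:
    """Map each busy stage to the 0-based instruction index it holds in a given revolution."""
    table = {}
    for stage_index, stage in enumerate(STAGES):
        instr = revolution - stage_index
        if 0 <= instr < n_instructions:
            table[stage] = instr
    return table


if __name__ == "__main__":
    print(revolutions_needed(100))                   # pipelined
    print(revolutions_needed(100, pipelined=False))  # no overlap
    print(occupancy(3, 100))                         # all four stages busy
```

In this model, 100 instructions finish in 103 revolutions when overlapped and 400 when not, which is the sense in which a full pipeline delivers about one operation per revolution.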

Word of the design gets around

Graduation and off to IBM

Design of the IBM 704

Design of the IBM 709

Design of the STRETCH

At this time (mid 1955)

I was surprised to have a man

assigned to my STRETCH project.

I initially assumed he reported to

me, but it became clear that he

thought I reported to him. This

was very disconcerting, for I had

been assured that STRETCH was

my project before I accepted the

assignment and had then gotten

Los Alamos to the negotiating table

and had achieved quite a bit of the

design. I wasn't certain I had the

situation figured out for sure, so I

continued on.

I was appalled, for

I knew we could never agree, and

the project would fail. I didn't

respond about my reaction; I just

went back to my office and wrote

my letter of resignation. I did

continue on until just before

Christmas, providing my best

design ideas, all of which were

lost, then left for South Dakota for

Christmas with my and my wife's

families, then on to Los Angeles to

join Ramo-Wooldridge's computer

division.

Resignation, on to Ramo-Wooldridge

Aeronutronics

Back to IBM

and the SPREAD committee

Architecting the IBM 360 Family

To meet the performance and

cost constraints, the small

machines had to use memory locations as registers in appropriate

cases, where the larger machines

could use hardware registers. I

also discovered that there had to

be some portion of the architecture

that had to be reminiscent of each

of the two significant families that

we were replacing, otherwise the

designers from those families

couldn't develop the confidence

that the design would be acceptable in their market segment. This

resulted in decimal operations

being memory to memory, like the

1401, rather than in registers, and

indexing very similar to the 7094.

to the West Coast with IBM and Stanford

In January [1965] I taught computer design at Stanford; this was

quite interesting, for I experienced

quite a large range in the ease that

the students had in their grasp of the

material. I never determined the reason, for I had no knowledge of their

previous experiences. The second

quarter I taught was concentrated on

the analysis and explanations for the

performance of a cache memory in

enhancing the speed of the computer. It was not too well organized, for

I was trying to increase my own

understanding. Concurrently I was

working on a number of my pet

problems at the IBM lab in Los

Gatos, with remarkable success.

made IBM Fellow and Advanced Computer Systems.

(Q) How did you fare in the design challenge and the consequences?

ACS Lab closed

Starting Amdahl

(Q) How did you get your first

start-up money?

Staffing, and the 100 Gate Package

More Financing

Intellectual Property?

Speed of Development

(Q) Why did you think you could compete with IBM when RCA and GE couldn't?

Use IBM's Operating System(s)

Sales and IBM Response

(Q) How did IBM respond to your success?

(Q) How did you expand your market into Europe?

(Q) Your relationship with Fujitsu was so strong; did you ever consider tempering it?

(Q) Did your efforts affect your health?

Trilogy

(Q) Given that you incurred so many problems in building a semiconductor facility, how could you have done it differently?

(Q) What was it about a start-up company that made it so attractive to you?

Amdahl's "Law"

During this time, I had access to

tape storage programs and data history for commercial, scientific, engineering and university computing

centers for the 704 through the

7094. This gave insight on relative

usage of the various instructions

and a most interesting statistic --

each of these computing center

work load histories showed that

In 1967 I was asked by IBM to

give a talk at the Spring Joint Computer Conference to be held on the

east coast. The purpose was for

me to compare the computing

potential of a super uniprocessor

to that of a unique quasi-parallel

computer, the Illiac IV, proposed

by a Mr. Slotnick.

The proposed Illiac IV had a single instruction unit (I-unit) driving

16 arithmetic units (E-units). Each

E-unit provided its own data

addresses and determined whether

or not to participate in the execution of the I-unit's current instruction,

an interesting, but controversial proposal. The super uniprocessor was a

design type, not a specific machine, so I had to estimate to

the best of my ability what performance could reasonably be achieved

by such a design.

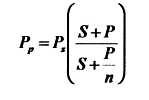

These figures are not quite the same as in the 1967 presentation, for

they weren't published, nor did I keep them, for I had no expectation

of the intensity of their afterlife! I never called this formula "Amdahl's

Law" nor did I hear it called that for several years; I merely considered it

an upper limit performance for a computer with ONE I-unit and N E-units running problems under the

control of that time period's operating system!
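For readers who want to experiment with the upper limit discussed above, the form in which the formula is commonly stated today is speedup = 1 / ((1 - p) + p / N), where p is the fraction of the work that can be spread across N units. The Python sketch below assumes that textbook form; the notation in the figures may differ, and the names `amdahl_speedup`, `speedup_limit`, and `parallel_fraction` are illustrative choices, not taken from the article.

```python
def amdahl_speedup(parallel_fraction: float, n_units: int) -> float:
    """Upper-bound speedup when a fraction of the work is spread over n_units.

    Assumes the commonly cited form 1 / ((1 - p) + p / N); the remaining
    (1 - p) of the work is taken to run strictly serially.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_units < 1:
        raise ValueError("n_units must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_units)


def speedup_limit(parallel_fraction: float) -> float:
    """Speedup approached as the number of units grows without bound."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0.0 else 1.0 / serial_fraction


if __name__ == "__main__":
    # Hypothetical workload: 90% of the work can use all 16 execution units.
    print(amdahl_speedup(0.9, 16))
    print(speedup_limit(0.9))
```

With the hypothetical 90% figure, 16 units give a speedup of about 6.4, and no number of units can push it past about 10, because the serial tenth of the work remains.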

Several years later I was informed of a proof that Amdahl's Law was invalidated by someone at

Los Alamos, where a number of computers were interconnected as an N-cube

by communication lines, but with each computer also connected to I/O

devices for loading the operating system, initial data, and results. This

allowed all control and data movement

to be carried out in parallel.

There has been no publicity

about the capability of the actual

Illiac IV. I did hear unofficially that

it was unable to be successfully

debugged at the University of Illinois and that it was shipped to the

NASA facility here in Sunnyvale

where debugging was being carried out by volunteers. I heard a

few months later that they had gotten it to work and had executed a

test program, but that no information on its performance had been

made available. I'm not certain

that this information was entirely

accurate so I cannot vouch for it.

This is a picture of me in front of the

entrance hall of the house I built so I

could finish my undergraduate

degree. I even had to make the front

door! The date of the photograph is

1948.

in the fastest member, the

model 90's there were three very

powerful ones, loop trapping,

associated with look-ahead,

planned by Dr. Tien Chi Chen and

myself, then virtual registers (registers assigned when and where

needed) and linked arithmetic

units, so results of one arithmetic

unit could become an input to

another arithmetic unit without

any intervening register storage;

these were planned by the regular

design team.

(A) I presented my alternative to

the project managers only to have

it rejected out of hand, for they

were wedded to the architecture

they had developed. I was pondering how to separate myself from

the impending loss leader when

their top logic designer got into

some trouble. The managers considered him unmanageable, but

couldn't fire him so they found the solution, transfer him to me! I was

delighted for he was responsible for the design of the most performance determining part of

their computer. I knew that if he did the

design of that part of the 360 alternative, there could be no charges

of faulty design. It took a bit over

two weeks to describe enough of

my performance approaches

before he recognized that it was

really feasible to compete with the

other design.

(Q) What happened after the ACS lab was closed?

(A) Just after the shoot-out, about

two thirds of the employees left

IBM, most of them forming a startup venture in designing a time-sharing computer; they got about 18

months' worth of capital investment.

A small group started a semiconductor company to develop field effect

transistor memory chips for add-on

memory for IBM computers and also

an ECL memory chip for cache

memories. I stayed on at IBM analyzing the performance of computing systems as a function of

memory size and disk and tape storage

units in the environment of multiprogramming. While I was doing

this, IBM management learned that a

company called Compat had

announced a minicomputer. They

had granted me permission to be on

the board of my brother's consulting

company, Compata, and immediately assumed it was Compat. Their

discussions went on for two or three months without ever asking me

before they recognized that it wasn't Compata; however emotions had

reached such a fever pitch that they sent me a letter demanding that I

resign from Compata's board, for it didn't look good that an IBM

employee was on the board of another company in the computer

field. I felt that my name had value

to him, and as well I was "hot under the collar" about IBM's handling of

the ACS project, so in September 1970 I wrote a letter explaining my

position and resigning from IBM rather than from my brother's board.

I also informed them that I intended to start my own large computer

company! The president of my division tried to talk me out of it, for

there was no money to be made in

large computers!

(Q) Why and how did you decide to start Amdahl Corporation?

(A) Ray Williams, the ACS financial

man, was aware of my anger and

disgust and came to me with the

information that he had some contacts in the venture capital world.

He proposed that we immediately

develop a business plan, and he'd

arrange meetings with the VCs. We

took about three weeks to do an

analysis of the formidable task of

competing head on with IBM, for

we intended to be compatible with

IBM and, in fact, use their operating system (we knew IBM had

decided to lease it independently

of the mainframe to reduce their

antitrust risk).

(A) I then traveled to Japan, invited by Fujitsu, to give several lectures on computers to their

engineers and to their board of directors. I had known several of their

top people for three or four years

and had great respect for them.

(Q) Did you have problems staffing and designing a competitive computer?

(A) We were asked by the ACS

start-up people to agree not to

make employment offers until they

had given up hope of getting more

capital; we agreed, and in early

January, 22 of their people listened

to our plan and joined, so we were

up and running.

Dr. Amdahl holding a 100-gate LSI air-cooled chip. On his desk is a circuit board

with the chips on it. This circuit board was for an Amdahl 470 V/6 (photograph

dated March 1973).

(Q) How did you finance such a demanding undertaking?

(A) During these early days Fujitsu

friends would drop by from time

to time. They never asked much

about our progress but they must

have sensed our growing confidence, for in late spring they asked

if we would consider an investment from them; they felt it would

need about 5 days of presentation

to evaluate us thoroughly, and

they would sign an agreement to

protect our technology. We agreed

and presented for three days. On

the fourth day they stopped us

saying they fully believed. They

invested $5 million and sent 20

engineers to assist in the development. Shortly after the presentation our LSI chips came back, and

they performed just as predicted!

We went on trying to raise more

capital, but no venture capital firm

believed we could compete with

IBM. It was difficult to argue the

case since RCA, General Electric,

Xerox, and Philco were all getting

out of computers; RCA and General Electric had each spent about $5

billion and were giving up! A surprise visit by Heinz Nixdorf from

Germany was exciting, for after a

few hours he agreed to put in $5

million. This also excited Fujitsu,

for they decided to invest an additional $5 million! These events

stirred the venture capital people

to invest $7.8 million!

(Q) How did you avoid encroaching on other IBM patents and other technical property?

(A) When we started the design of

our computer I reminded everybody that we were all bound by

our agreements with IBM not to

use any of their intellectual property, but that if we used only the

descriptions in IBM's publicly

provided user's manual to do our

designs, it would be free of conflict. Fortunately none of the

designers had ever designed a 360

computer, so that manual was necessary and there was no carryover

of 360 logic detail. I had a friend

in IBM's legal staff who later

informed me that IBM had made

two in-depth investigations of our

product to determine if there was

any misappropriation of IBM property, but decided we were clean as

a hound's tooth, however clean

that is. We also had to test the

availability of the IBM operating

system licensed to our computer.

We ordered it, and it took IBM

almost two months to decide they

had to do it, but they did! In short,

we didn't do anything quite like

IBM's patent coverage, and we

took advantage of their dropping

their tie-in software policy as well

as their well-defined market place!

(Q) How could you develop a product so much faster than IBM's?

(A) The technology in our computer was much more advanced than

IBM's, for we had opted for the LSI

chip with 100 gates rather than the

MSI (medium scale integration)

with only 35 gates. This meant we

could avoid nearly two thirds of

the chip crossings, which would

cost significant time delays in the

logic paths involved. This also

reduced the size of the machine,

and each foot of wire cost 1

nanosecond. We also designed a

simpler machine, by a more orderly, but not slower, instruction execution sequence like I used in the

WISC. There was also another factor, based on IBM's market management approach, where they

avoided too great an advance in

technology upgrades, for users

could drop down one member of

the 360 family if the smaller member was fast enough. Amdahl's

offering was a bit more than three

times faster than IBM's large member, and we priced it most competitively, for we had to overcome

customer management's belief that IBM

was the only safe decision.

Amdahl computers during that

time utilized IBM instruction sets

that could employ the IBM operating system, which was almost universal in the computing marketplace.

Consequently, Amdahls

didn't contain architectural

advances which altered instruction

results, but did contain pipelining

as in the WISC and had much

more advanced technology, such

as LSI (Large Scale Integration)

with air cooling, a world first

(developed by Fred Buelow),

rather than IBM's MSI (Medium

Scale Integration) with water cooling. Amdahl also included another world first, remote diagnostics,

called "Amdac," invented by the

field engineers.

Photograph of the Amdahl 470 V/6.

(A) IBM's earlier competitors

developed their offerings while

IBM was "bundling" its software

with its hardware, therefore the

competitor had to develop its own

software. RCA designed a machine

nearly compatible with IBM's and

software that was also quite similar; however, the deviations from

IBM's hardware were carefully

designed to appear easy to move

to, but not appear too difficult to

return to IBM if they didn't like it.

RCA, however, had made it quite

difficult to return, for they considered it to be the "barb" on their

fishhook! Being later and IBM

insiders, we had the advantage to

plan on IBM having to maintain its

unbundling; however, the Venture

Capital world was unable to readjust its thinking when the new

strategy was presented by an aspiring startup! Some even thought

we couldn't design an IBM compatible computer since RCA couldn't!

The cost of developing our

own operating system and other

supporting software would have

well more than doubled our capital requirements!

(Q) Why could you plan on using the IBM operating system?

(A) When IBM decided that they

were in serious risk of an antitrust

action for offering their hardware

complete with all of the software,

thus virtually keeping any other

supplier from being able to make

an economically attractive offer in

this marketplace, they decided the

separation of their software package

from the hardware would not

be too costly, as long as the software

package was kept bundled

(this is my guess, for I was not

involved in any decision-making).

The pricing of the software bundle

was also economical enough to

discourage competition. IBM also

did not make too big a public

announcement, as far as I recollect the event, for the VCs

didn't seem aware of it. IBM also

took quite a bit of time to decide

they had to honor our order for

their software package to be

licensed to an Amdahl computer,

but I was convinced they had to or

the antitrust threat would immediately materialize!

(Q) How did Amdahl's marketing results progress?

(A) The initial market penetration

by Amdahl was its first sale to the

NASA space computing center in

New York, where we were

allowed to begin installation on

Friday night, with the expectation

that it would take about a week

and a half, like IBM required, but

they were astonished when they were

informed on Sunday noon that the

computer was ready for use!

(A) The next move by IBM was the

announcement of a new 360

improved family, the first to be the

3030 (I'm sure you hunters can

recognize the significance of that

choice of number). This machine

was to be equal in speed to

Amdahl's and was to be priced

30% lower than ours! Immediately

we analyzed what we had to do to

respond. We came up with an

improvement of our own, including a smaller version to expand the

market we addressed. We also had

to negotiate with Fujitsu to get

lower prices on their manufactured

parts (their manufacturing had

been very profitable, and with a

smaller version, they could reduce

their prices and still fare as well).

With this plan, we were able to

maintain our 30% pretax profit in

spite of IBM's attempt to "mow our

grass to ground level". Over time

our competition reduced the cost

of computing for the mainframe

customers by over an order of

magnitude! IBM retaliated in Japan

by calling on the government to

limit the use of their architecture

and software there, or they would

reduce the prices in Japan to kill

off the Japanese computer companies, or so the government

informed Fujitsu.

(A) Nixdorf had not been a significant player, for their marketing

people had only had experience

selling small machines, and they

decided not to try to make a

change as drastic as would be

required, so they sold their stock

for a very significant profit.

Amdahl entered the European

market, first in Germany upon

receiving an inquiry and visit from

the Max Planck Institute in Munich

and from the European Space

Agency in Oberpfaffenhofen

(with Nixdorf's blessing and assistance), then in Norway where I

was questioned about my recollections of my Norwegian roots and

coerced into singing a song written

by the immigrants (this was publicized in Fortune Magazine under

the title "A Frog Sings in Norway").

Italy came to us in the person of

the former IBM country manager

who now had responsibility for all

central government computing,

and who couldn't get a deal from

IBM. In France I struck a deal

where we would get import licenses for any sale we could make if

we could have anything made for

our computer in a factory in

Toulouse. Being an inveterate punster I informed my VP of engineering that to get the proper picture

he should make "la trek Toulouse".

We were quoted a price for memory which was slightly less than it

cost us to make it! Britain was easy

to enter, but later.

(A) I was concerned that our

dependence on Fujitsu was in danger of making us effectively a subsidiary, and I felt that the only way

we could be independent would

be to find an alternative supplier of

new and much denser chips for

our new advanced computer offerings. I was unable to get support

from the engineering staff, for they

felt they were not capable of dealing with some of the problems that

might come up. In the meantime

Fujitsu heard of this and began to

try to make me stop agitating and

accept their new planned chips. It

got bad enough that the president

came to Sunnyvale [California] and

verbally chewed me out. I had

wanted a reduced dependence on

Fujitsu, not a separation from

them, for I was very much mindful

that without them Amdahl would

never have survived! I also had

quite a number of very close

Japanese friends, and I still have

them today. I must also say that

Fujitsu treated the company very

fairly for the rest of its existence.

(A) The stress of this struggle was

so severe that my back went into

spasm. Some twelve years earlier

I had ruptured a disc, and it had

healed, but it had still remained

very sensitive. I realized that this

spasm was so severe that I couldn't go back to work for quite a

long time, so I decided it was best

to resign rather than continue

struggling.

(Q) What did you think you could do as a follow-on to Amdahl Corporation?

(A) My back took about eight

months to get back to near normality. During that time I pondered

what I should do when healed.

Carl and I brainstormed an interesting approach to very large scale

integration, which we felt could

make a wafer-size chip! I mentioned it to Clifford Madden,

Amdahl's VP of Finance. He got so

excited that he insisted that the

three of us should start a new company!

(A) I was chairman of the board, for

I still had to protect my back, Clifford was president, and Carl was

head of engineering. We named the

company Trilogy, for the technique

employed to make the wafer-scale

integration with high yield was to

use triplet gates, where it was possible to test each gate and be able to

remove one, or even two, of the

gates if they were faulty, thus assuring an effectively working gate

unless all three were faulty. The

financial planning community

became wildly excited, and we

managed to acquire over $100 million. Carl and I had planned to

have a semiconductor company

process the chips, but some of the

things we would have to do weren't

standard, so the president decided

we'd have to build our own facilities. The building of the super clean

semiconductor facility was delayed

during construction by unusually

heavy and extended rains, so the

costs mounted more rapidly than

planned. The complexity of the

routing program software, depositing enough metal for high power

distribution and good bonding of

the chip to the chip carrier were

solved, but took extra time. The

only problem we hadn't completely

solved was the leakage of etching

fluids through layers of interconnection (the universal problem for all

semiconductor companies). We estimated that two more passes of making the chip, testing for leakage

faults, determining how to modify

the masks to fix it and making the

new masks would take about 24

months. The costs of the delays had

reduced our capital so much that

only about 24 months of run rate

were left! We had proven to our

satisfaction that we could do the

wafer sized chip, for we had made

three-quarters of the wafer successfully, but by the time we could successfully produce our full chip

repeatedly, we would have no

money left to exploit it, and we felt

certain we could not raise more

money! Carl suggested we could

successfully produce 1/4 size chips

and design a small product using

them. I felt that the level of revenue

we could achieve with that

approach could hardly keep us

afloat, so I contacted some of our

principal investors and asked what

they would recommend. They

asked us to acquire a company with

a computer product that would benefit from our remaining funds, so

we did that. The negative publicity

from this was as large as the positive

publicity when we started!
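The triplet-gate idea described in this answer lends itself to a back-of-the-envelope yield estimate. The Python sketch below is only an illustration under assumptions not stated in the interview: each physical gate copy is faulty independently with the same probability, testing always identifies faulty copies, and the fault rate and gate count used in the example are hypothetical. The function names are invented here.

```python
def gate_ok_probability(copy_fault_prob: float, copies: int = 3) -> float:
    """Probability that a logical gate works, i.e. not all of its copies are faulty.

    Assumes each copy fails independently with probability copy_fault_prob.
    """
    if not 0.0 <= copy_fault_prob <= 1.0:
        raise ValueError("copy_fault_prob must lie in [0, 1]")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return 1.0 - copy_fault_prob ** copies


def wafer_yield(copy_fault_prob: float, logical_gates: int, copies: int = 3) -> float:
    """Probability that every logical gate on the wafer works (same independence assumption)."""
    return gate_ok_probability(copy_fault_prob, copies) ** logical_gates


if __name__ == "__main__":
    # Hypothetical numbers: 0.1% fault rate per gate copy, 100,000 logical gates.
    print(wafer_yield(0.001, 100_000, copies=1))  # no redundancy
    print(wafer_yield(0.001, 100_000, copies=3))  # triplet gates
```

Under these hypothetical numbers the unreplicated wafer essentially never works, while the triplet version works about 99.99% of the time, which suggests why redundancy of this kind was attractive for wafer-scale parts.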

(A) If I had the chance to do it all

over again I would first offer

enough money to a semiconductor

company to compensate for solving

the nonstandard processes. If that

wouldn't work, I would take Carl's

suggestion and see if we could sell

the product design and chip availability to stay in business. These

might not have worked, but if they

did we could have made a significant success! I strongly enjoyed the

atmosphere of cooperative enthusiasm in the start-up adventure!

These latter two properties I

determined in 1969, when I privately estimated that System 360 would

have to change the address length

to exceed about 15 MIPS (Million

Instructions Per Second).